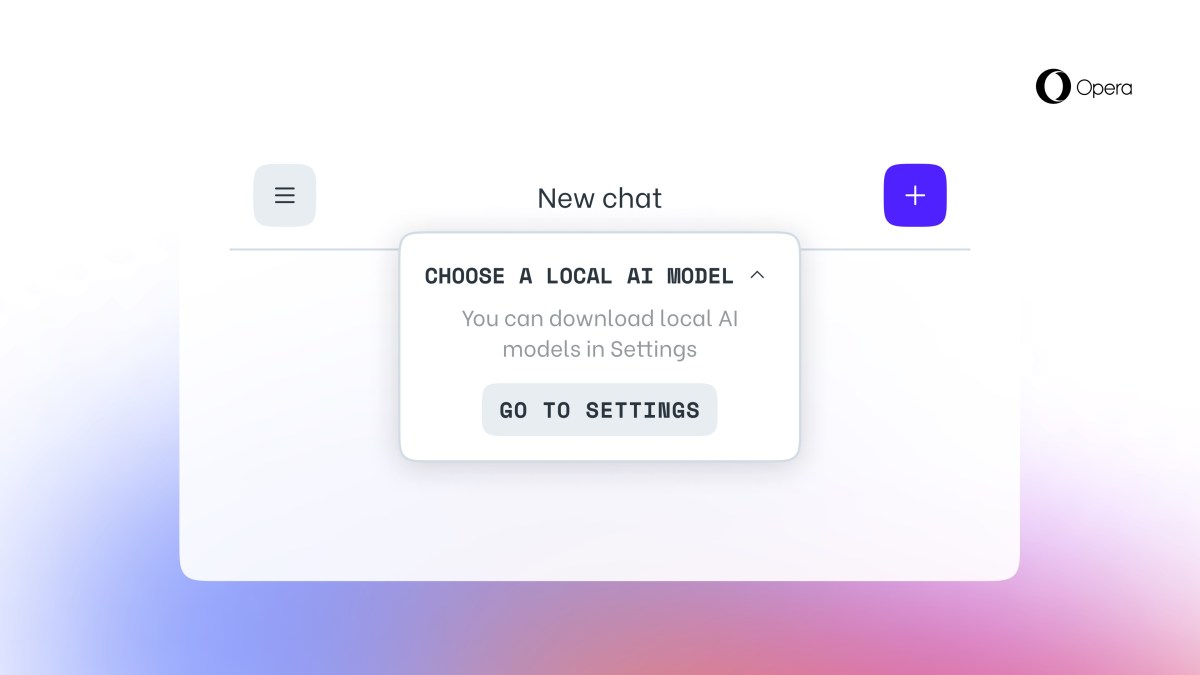

Opera, a leading web browser company, has announced a new feature that allows users to download and use Large Language Models (LLMs) directly on their computers. This feature is initially rolling out to Opera One users who receive developer stream updates, giving them access to over 150 models from more than 50 families.

These models include Llama from Meta, Gemma from Google, and Vicuna, among others. The feature is part of Opera’s AI Feature Drops Program, providing users with early access to cutting-edge AI features.

Opera is leveraging the Ollama open-source framework within the browser to run these models locally on users’ computers. Currently, the available models are a subset of Ollama’s library, with plans to incorporate models from various sources in the future.
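For readers curious what running a model locally through Ollama involves, here is a minimal sketch of a request to a standalone Ollama server over its local HTTP API. It assumes Ollama is installed separately and listening on its default address (`localhost:11434`), and that a model such as `llama2` has already been pulled. Whether Opera exposes its bundled Ollama instance this way is not stated, so this illustrates the framework rather than Opera's integration. The helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Default endpoint of a standalone Ollama server (assumption: default port, not Opera's setup).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generation request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    # With "stream": False, the completion is returned in the "response" field.
    return body["response"]
```

With a server running, `generate("llama2", "Why is the sky blue?")` would return the model's answer as a string. The request goes only to `localhost`, which is the point of running models locally.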

Image Credits: Opera

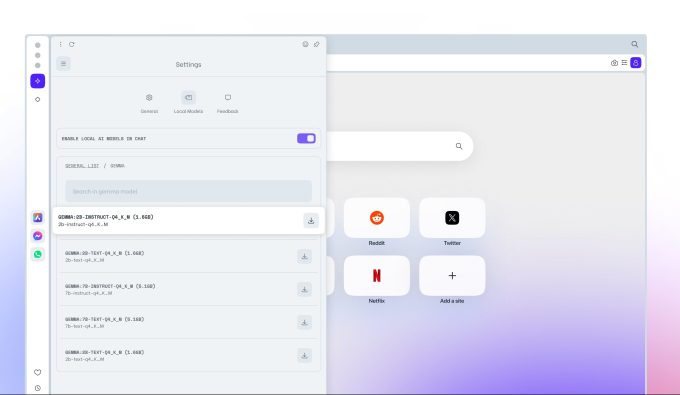

Each model variant takes up more than 2GB of space on the user’s local system, so users should keep an eye on free storage to avoid running out. Notably, Opera does nothing to reduce storage use when downloading a model.
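Since every variant needs more than 2GB, it can help to check free space before downloading several models. The sketch below is one way to do that arithmetic. The model sizes and the 10 GiB safety reserve are illustrative assumptions, not figures from Opera, and the function names are hypothetical.

```python
import shutil

GIB = 1024 ** 3  # bytes per gibibyte


def free_space(path: str = ".") -> int:
    """Return the free bytes on the disk that contains `path`."""
    return shutil.disk_usage(path).free


def fits_on_disk(model_sizes_gib, free_bytes, reserve_gib=10.0):
    """Return True if all models fit while leaving `reserve_gib` GiB free.

    `model_sizes_gib` holds assumed per-model download sizes in GiB.
    """
    needed = sum(model_sizes_gib) * GIB
    return free_bytes - needed >= reserve_gib * GIB
```

For example, `fits_on_disk([2.0, 4.0], free_space())` reports whether two models of roughly 2 GiB and 4 GiB would fit on the current disk with the reserve left over.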

Jan Standal, a VP at Opera, stated, “Opera is now offering users access to a wide selection of 3rd party local LLMs directly within the browser. It is expected that these models may decrease in size as they become more specialized for specific tasks.”

Image Credits: Opera

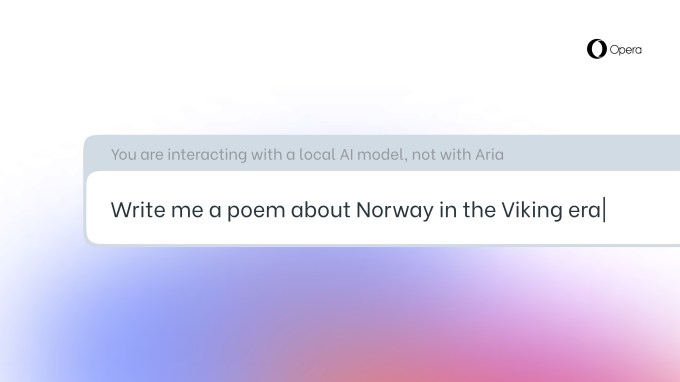

This feature is useful for users who want to test various models locally. However, if storage space is a concern, online tools like Quora’s Poe and HuggingFace’s HuggingChat let users explore different models without downloading them.

Opera has been actively integrating AI-powered features since last year, when it introduced the Aria assistant in the browser’s sidebar. Subsequent updates brought Aria to the iOS version. After the EU’s Digital Markets Act (DMA) pushed Apple to loosen its restrictions on browser engines, Opera announced that it is developing an AI-powered browser for iOS with its own engine.