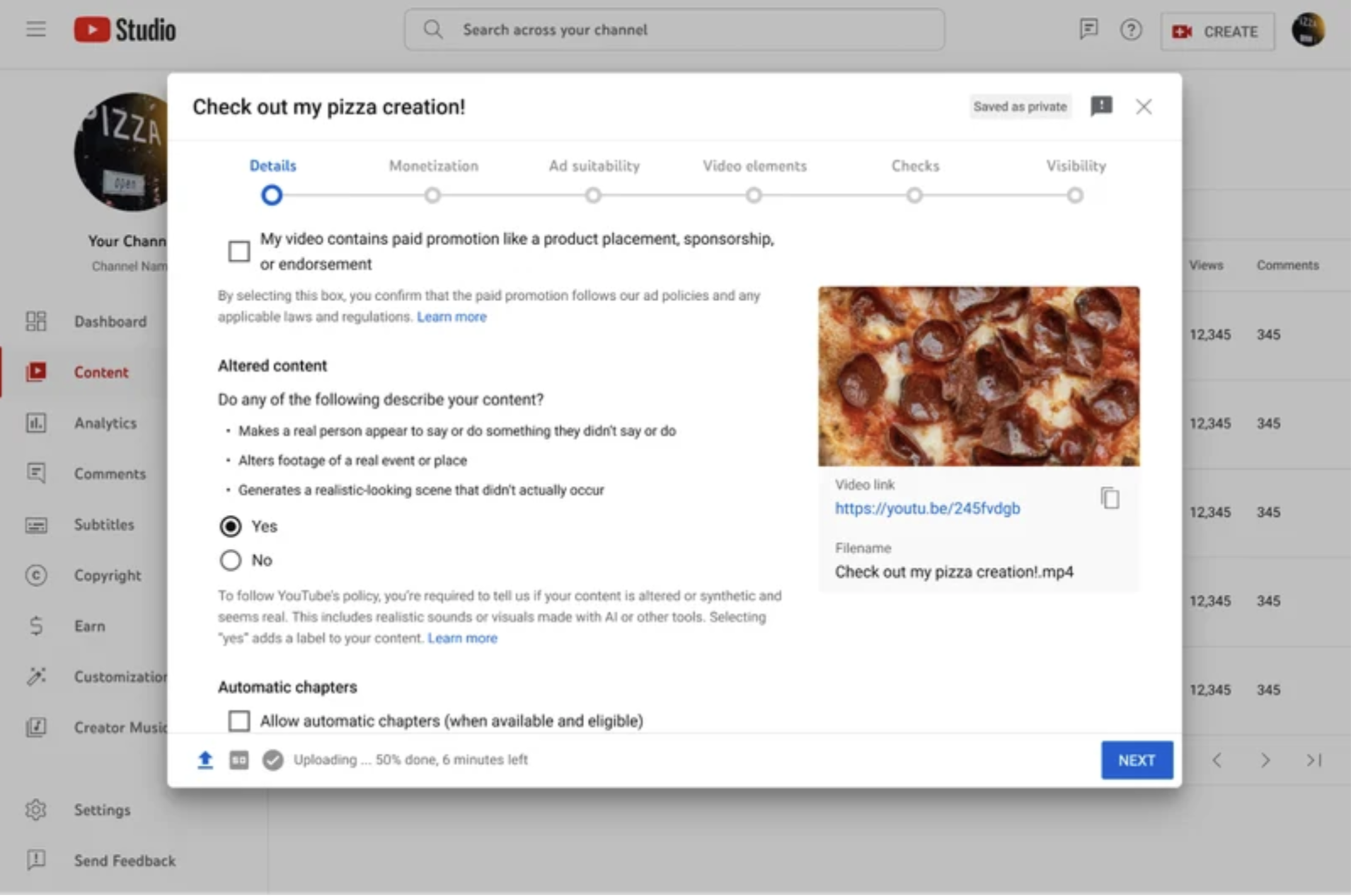

YouTube has announced that creators must now disclose when realistic content was made with AI. The platform is introducing a new tool in Creator Studio that requires creators to flag content that viewers could mistake for real when it was made with altered or synthetic media, including generative AI.

The disclosures are meant to keep viewers from being misled by synthetic videos passed off as real, as generative AI tools grow more sophisticated. Experts have warned that AI and deepfakes could pose risks during future U.S. presidential elections, and YouTube's move is a proactive step against that threat.

The announcement follows through on a commitment YouTube made in November to introduce updated AI policies. The policy requires creators to disclose content that could deceive viewers into believing it is real, but does not require disclosure for content that is clearly unrealistic or animated.

Image Credits: YouTube

YouTube's focus is on videos that feature realistic people or events and have been digitally altered or synthesized. Creators must disclose manipulations such as face swaps or synthetic voiceovers, altered footage of real events or places, and realistic depictions of fictional scenarios.

Most videos will display the label in the expanded description, while videos on more sensitive topics, such as health or news, will carry a more prominent label directly on the video. The labels will roll out first on the YouTube mobile app and later on desktop and TV.

To ensure compliance, YouTube plans to take enforcement action against creators who consistently fail to use the required labels. Where creators omit a label, especially if the content risks misleading viewers, YouTube may add the label itself.